Flipped.ai Newsletter

Meet the 671B beast: Deep Cogito v2’s self-improving AI.

From math to legal logic, this open-source model family is reshaping how AI thinks—and learns to think better.

Transform your hiring with Flipped.ai – the hiring Co-Pilot that's 100X faster. Automate hiring, from job posts to candidate matches, using our Generative AI platform. Get your free Hiring Co-Pilot.

Dear Reader,

What if it were possible to build AI that doesn’t just think but learns to think better, and to make it open for anyone to use?

Flipped.ai’s weekly newsletter is read by more than 75,000 professionals, entrepreneurs, decision-makers, and investors around the world.

This week, we’re diving into Deep Cogito v2—a groundbreaking open-source AI model family redefining reasoning itself. From solving complex math to legal logic and even image reasoning, these models rival top proprietary systems like Claude 4 Opus—yet were trained at a fraction of the cost.

The best HR advice comes from people who’ve been in the trenches.

That’s what this newsletter delivers.

I Hate it Here is your insider’s guide to surviving and thriving in HR, from someone who’s been there. It’s not about theory or buzzwords — it’s about practical, real-world advice for navigating everything from tricky managers to messy policies.

Every newsletter is written by Hebba Youssef — a Chief People Officer who’s seen it all and is here to share what actually works (and what doesn’t). We’re talking real talk, real strategies, and real support — all with a side of humor to keep you sane.

Because HR shouldn’t feel like a thankless job. And you shouldn’t feel alone in it.

Meet Deep Cogito v2: The self-improving AI family

Deep Cogito, a San Francisco-based startup founded by ex-Googlers, has dropped four new open-source AI models under the Cogito v2 banner. These aren’t your average language models: they’re trained to sharpen their own reasoning skills, so each training iteration produces a model that reasons faster and better than the last. Here’s the lineup:

Cogito v2-70B (Dense): Predictable and perfect for low-latency apps.

Cogito v2-109B (MoE): A sparse, efficient model for complex tasks.

Cogito v2-405B (Dense): High performance for varied hardware.

Cogito v2-671B (MoE): The flagship beast, rivaling top proprietary models like Claude 4 Opus and OpenAI’s o3.

The star of the show? The 671B Mixture-of-Experts (MoE) model, which is already being called one of the most powerful open-source AIs out there. The models are available on Hugging Face and Unsloth, as well as through APIs from Together AI, Baseten, and RunPod, so developers and enterprises can start exploring them now.
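To make “sparse” concrete: in a Mixture-of-Experts layer, a router scores every expert but runs only the top few for each input, so most parameters sit idle on any given step. The sketch below is a generic, textbook-style top-k router in plain Python. The expert functions, gate weights, and `k=2` are illustrative assumptions, not details of Cogito’s actual architecture, which the article does not describe.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, gate: List[Vector], experts: List[Expert], k: int = 2) -> Tuple[Vector, List[int]]:
    """Toy top-k Mixture-of-Experts layer: only k experts run for this input."""
    # Router: one score per expert (dot product with that expert's gate vector).
    logits = [sum(g * xi for g, xi in zip(row, x)) for row in gate]
    # Keep the k highest-scoring experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    weights = softmax([logits[i] for i in chosen])  # renormalize over chosen experts
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen


# Four hypothetical "experts": expert i simply scales its input by (i + 1).
experts = [lambda v, s=i + 1: [s * vi for vi in v] for i in range(4)]
gate = [[0.1, 0.0], [2.0, 0.0], [1.0, 0.0], [-1.0, 0.0]]  # made-up router weights

out, chosen = moe_forward([1.0, 0.0], gate, experts, k=2)
print(chosen)  # [1, 2]
```

Only experts 1 and 2 do any work for this input; experts 0 and 3 cost nothing, which is why an MoE model’s compute per token depends on the experts it activates rather than on its total parameter count.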

Performance of Cogito 70B

Why is it a big deal?

Unlike traditional reasoning models that grind through long reasoning chains, Cogito v2 models have a knack for “machine intuition.” They don’t just search for answers; they anticipate them, producing reasoning chains about 60% shorter than those of rivals like DeepSeek R1. Plus, Deep Cogito built this entire lineup for under $3.5 million, a fraction of the budgets of big players like OpenAI. Efficiency? Check. Innovation? Double-check.

The secret sauce: Iterated distillation and amplification (IDA)

So, how does Cogito v2 pull off this reasoning magic? Enter Iterated Distillation and Amplification (IDA), a technique that’s like teaching the AI to trust its gut. Here’s the gist:

During training, the models run reasoning chains and learn from their own thought processes.

These insights are distilled back into the model’s weights, building a stronger “intuition” for problem-solving.

The result? Shorter, smarter reasoning paths that get to the answer faster without wandering.

Think of it like an AI that’s been to therapy—it knows itself better and doesn’t overthink. This approach makes Cogito v2 models faster, cheaper to run, and scarily good at tasks like math, legal reasoning, and even image analysis (more on that later!).
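The amplify-then-distill loop can be illustrated with a deliberately tiny analogy. In this hypothetical sketch, “amplification” is a slow, budgeted step-by-step search that starts from the model’s quick guess, and “distillation” refits that quick guess to the search results. The hidden rule, the verifier `check`, the step budget, and the one-parameter “intuition” `w` are all invented for illustration. This is not Deep Cogito’s training code, which the article does not describe at this level.

```python
import random
from typing import List, Tuple

HIDDEN_RATE = 7.0  # hypothetical rule behind the toy problems: answer = round(7 * x)


def check(x: float, y: int) -> int:
    """Verifier: 0 if y is right, -1 if y is too low, +1 if y is too high."""
    target = round(HIDDEN_RATE * x)
    return (y > target) - (y < target)


def amplify(x: float, w: float, budget: int) -> Tuple[int, int]:
    """'Slow thinking': start from the intuitive guess, then search one step at a time."""
    y = round(w * x)  # the fast, intuitive answer
    steps = 0
    while steps < budget and check(x, y) != 0:
        y -= check(x, y)  # move one unit toward the target
        steps += 1
    return y, steps


def distill(xs: List[float], ys: List[int]) -> float:
    """'Distillation': refit the intuition y ≈ w * x to the amplified answers (least squares)."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


def run(rounds: int = 5, budget: int = 100, seed: int = 0) -> Tuple[float, List[float]]:
    rng = random.Random(seed)
    xs = [rng.uniform(1, 50) for _ in range(200)]
    w = 1.0  # weak starting intuition
    avg_steps = []
    for _ in range(rounds):
        results = [amplify(x, w, budget) for x in xs]
        avg_steps.append(sum(s for _, s in results) / len(results))
        w = distill(xs, [y for y, _ in results])  # next round starts from better intuition
    return w, avg_steps


w, avg_steps = run()
print(f"learned w = {w:.3f}")
print("average search steps per round:", [round(s, 1) for s in avg_steps])
```

Across rounds, the average number of search steps falls toward zero as the distilled guess improves, mirroring the article’s point that better intuition means shorter reasoning paths.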

Performance of Cogito 109B MoE

Standout features of Cogito v2

1. Hybrid reasoning power

Cogito v2 models are like Swiss Army knives—they can answer instantly or pause to reflect when needed. This flexibility makes them perfect for everything from quick chatbot responses to deep, complex problem-solving. And because their reasoning is baked into their training, they’re efficient even in “standard” mode.

2. Image reasoning (Yes, really!)

Here’s where it gets wild: Cogito v2 wasn’t explicitly trained to reason about images, but it does it anyway. In one test, the 671B model compared images of a duck and a lion, analyzing their habitats, colors, and composition like a pro. This “emergent property” could be a goldmine for future multimodal AI systems.

3. Budget-friendly innovation

Deep Cogito trained all four models (plus earlier versions) for under $3.5 million. Compare that to the $100 million+ budgets of some frontier models, and it’s clear they’re doing more with less. By focusing on smarter reasoning rather than brute-force data, they’re keeping costs low and performance high.

4. Quantized for accessibility

Want to run a massive 671B model on more modest hardware? Deep Cogito offers an 8-bit floating point (FP8) version that halves the weight footprint compared with 16-bit weights while keeping 95-99% of the model’s performance. The full 671B model still needs a multi-GPU server, but FP8 makes deployment noticeably easier and cheaper.
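The size reduction is simple arithmetic on the parameter count. A back-of-envelope sketch, assuming a 16-bit (BF16-style) baseline for comparison and counting weights only:

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Weight-only footprint in decimal gigabytes (1 GB = 1e9 bytes).

    Ignores KV cache, activations, and any tensors kept at higher precision.
    """
    return n_params * bits_per_param / 8 / 1e9


PARAMS = 671e9  # total parameter count of the flagship MoE model

bf16_gb = weight_memory_gb(PARAMS, 16)  # assumed 16-bit baseline
fp8_gb = weight_memory_gb(PARAMS, 8)    # 8-bit floating point

print(f"16-bit weights: ~{bf16_gb:,.0f} GB")  # ~1,342 GB
print(f"FP8 weights:    ~{fp8_gb:,.0f} GB")   # ~671 GB
```

Real deployments need additional memory for the KV cache and activations on top of these figures.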

Performance of Cogito 405B

Real-world wins: How Cogito v2 shines

Let’s get practical—how does this tech actually perform? Here are a few examples that’ll make your jaw drop:

Math made simple: Asked if a train going 80 mph can reach a city 240 miles away in under 2.5 hours, Cogito 671B quickly calculates 240 ÷ 80 = 3 hours and says, “Nope, too slow!” It does this with a reasoning trace of under 100 tokens, while DeepSeek R1 takes over 200.

Legal reasoning: When tasked with applying a U.S. Supreme Court ruling to a hypothetical case, Cogito v2 breaks it down into clear, logical steps, delivering nuanced answers that rival human experts.

Handling ambiguity: In a classic puzzle—“If Alice is Bob’s mother, and Bob is Charlie’s father, what is Alice to Charlie?”—Cogito v2 nails it: Alice is Charlie’s grandmother, even when the question gets tricky.
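The math and kinship examples have answers that can be checked mechanically. The sketch below is a plain deterministic check of those expected answers, not a reconstruction of how Cogito reasons; the `PARENT_OF` table and `relation` helper are hypothetical illustrations.

```python
from typing import Dict, Optional, Tuple

# Math example: can 80 mph cover 240 miles in under 2.5 hours?
distance_miles = 240
speed_mph = 80
limit_hours = 2.5

travel_hours = distance_miles / speed_mph    # time needed at 80 mph
makes_it = travel_hours < limit_hours        # is that under the limit?
required_mph = distance_miles / limit_hours  # speed that would be needed

# Kinship puzzle: child -> (parent, parent's role), from the two stated facts.
PARENT_OF: Dict[str, Tuple[str, str]] = {
    "Bob": ("Alice", "mother"),    # Alice is Bob's mother
    "Charlie": ("Bob", "father"),  # Bob is Charlie's father
}


def relation(elder: str, younger: str) -> Optional[str]:
    """Walk up the parent chain from `younger` until `elder` is found."""
    person, generation = younger, 0
    while person in PARENT_OF:
        parent, role = PARENT_OF[person]
        generation += 1
        if parent == elder:
            if generation == 1:
                return role
            base = "grandmother" if role == "mother" else "grandfather"
            return "great-" * (generation - 2) + base
        person = parent
    return None  # elder is not an ancestor of younger


print(travel_hours, makes_it, required_mph)  # 3.0 False 96.0
print(relation("Alice", "Charlie"))          # grandmother
```

The train would need to average 96 mph to make it in 2.5 hours, and walking two generations up from Charlie lands on Alice in the “mother” role, hence grandmother.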

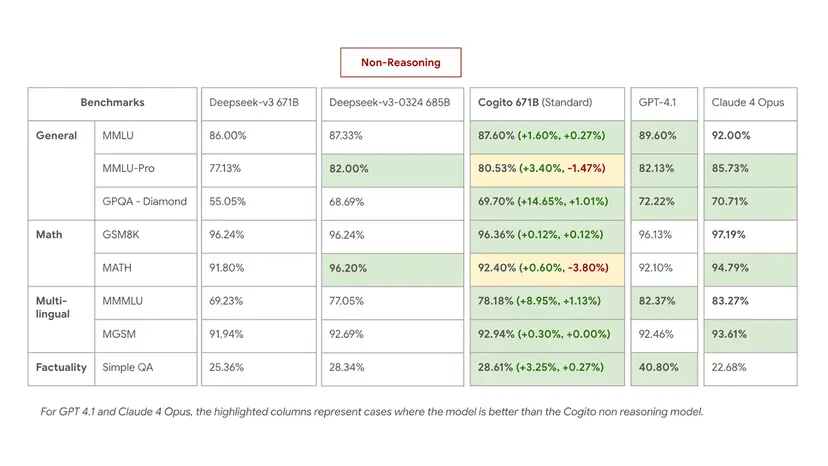

Performance of Cogito 671B MoE in Non-Reasoning

These models don’t just answer—they think like pros, making them a dream for AI developers tackling real-world challenges.

The bigger picture: Open-source and ambitious

Deep Cogito isn’t just about building cool models; they’re on a mission to create superintelligence through iterative self-improvement. Their “hill climbing” approach means each model learns from the last, getting smarter with every release. And the best part? Every model is open-source, so developers and researchers worldwide can jump in and build on this tech.

Co-founded by Drishan Arora, a former Google LLM engineer, Deep Cogito emerged from stealth in April 2025 after a $13 million seed round led by Benchmark. With partners like Hugging Face and Meta’s Llama team, they’re already making waves in the AI community.

Performance of Cogito 671B MoE in Reasoning

What’s next for Deep Cogito?

The Cogito v2 release is just the beginning. The team plans to keep refining their models, pushing the boundaries of AI reasoning while staying true to their open-source roots. For AI professionals, this means more tools to play with, from fine-tuning on Hugging Face to running models locally via Unsloth.

Why should you care?

If you’re an AI developer, researcher, or enthusiast, Cogito v2 is your chance to work with cutting-edge models that rival proprietary giants—without the massive price tag. Whether you’re building apps, solving complex problems, or just curious about AI’s future, these models offer a glimpse into a world where machines don’t just think—they learn to think better.

Ready to explore? Head to Hugging Face or Unsloth to download the models, or check out APIs from Together AI, Baseten, or RunPod. Let’s shape the future of AI together!

Stay curious.

Want to get your product in front of 75,000+ professionals, entrepreneurs, decision-makers, and investors around the world? 🚀

If you are interested in sponsoring, contact us at [email protected].

Thank you for being part of our community, and we look forward to continuing this journey of growth and innovation together!

Best regards,

Flipped.ai Editorial Team