Google sees what you see

Transform your hiring with Flipped.ai – the hiring Co-Pilot that's 100X faster. Automate hiring, from job posts to candidate matches, using our Generative AI platform. Get your free Hiring Co-Pilot.

Dear Reader,

Flipped.ai’s weekly newsletter is read by more than 75,000 professionals, entrepreneurs, decision-makers, and investors around the world.

In this newsletter, we’re excited to share Google's revolutionary AI Mode update, which adds powerful multimodal search capabilities. Discover how this technology lets users ask complex questions about images by combining Google Lens's visual recognition with Gemini AI's reasoning abilities. Learn about real-world applications, implications for different stakeholders, and how this advancement signals a fundamental shift in how we interact with visual information online. Stay ahead of the curve with our comprehensive analysis of this game-changing search technology.

Before we dive into the newsletter, check out this issue’s sponsor.

You’ve heard the hype. It’s time for results.

After two years of siloed experiments, proofs of concept that fail to scale, and disappointing ROI, most enterprises are stuck. AI isn't transforming their organizations — it’s adding complexity, friction, and frustration.

But Writer customers are seeing positive impact across their companies. Our end-to-end approach is delivering adoption and ROI at scale. Now, we’re applying that same platform and technology to build agentic AI that actually works for every enterprise.

This isn’t just another hype train that overpromises and underdelivers. It’s the AI you’ve been waiting for — and it’s going to change the way enterprises operate. Be among the first to see end-to-end agentic AI in action. Join us for a live product release on April 10 at 2pm ET (11am PT).

Can't make it live? No worries — register anyway and we'll send you the recording!

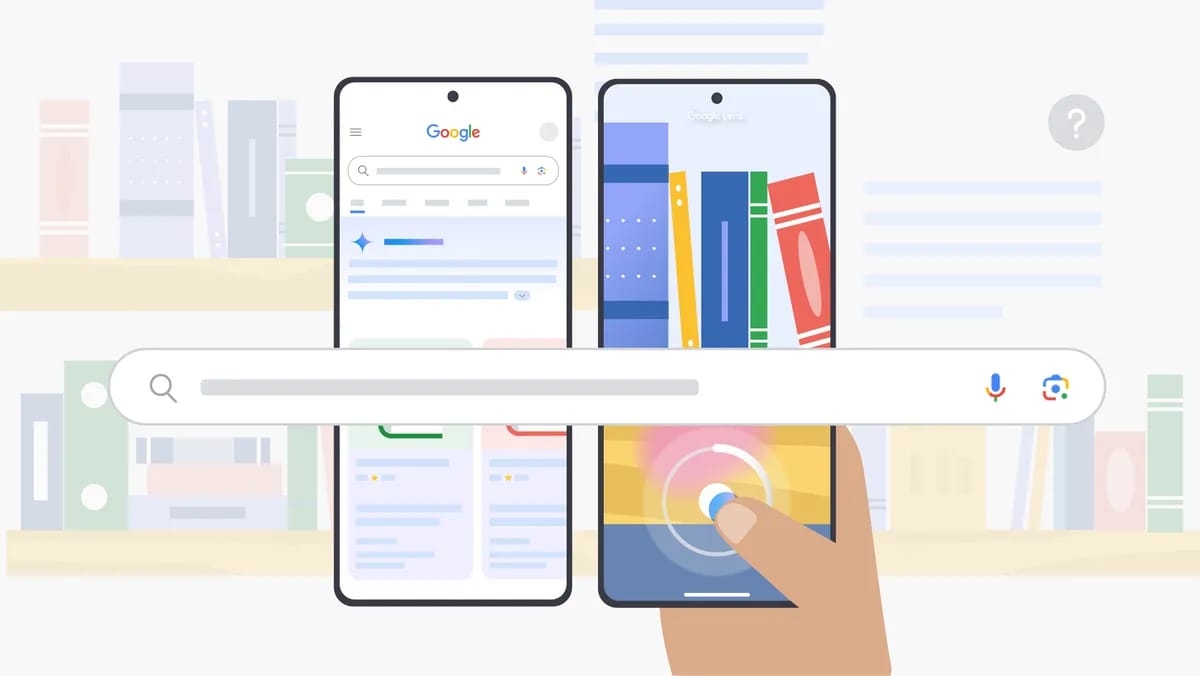

Google expands AI Mode with powerful visual search

Google has significantly upgraded its AI Mode in Search with groundbreaking multimodal capabilities, allowing users to ask complex questions about images. Previously available only to Google One AI Premium subscribers paying $20 monthly, this powerful feature is now rolling out to millions more Labs users in the United States, making advanced AI search more accessible than ever.

The new feature represents a major evolution in how we interact with visual information online, combining the visual recognition capabilities of Google Lens with the reasoning power of Google's Gemini AI model. This update signals Google's commitment to maintaining its leadership in search technology amid growing competition from AI-native search alternatives.

What exactly is Google's AI Mode?

Before diving into the new capabilities, let's clarify what AI Mode actually is. Launched as part of Google Search Labs, AI Mode represents Google's response to the rising popularity of conversational AI search engines like Perplexity AI and OpenAI's ChatGPT Search.

Unlike traditional Google Search, which typically answers short keyword queries with a list of links, AI Mode allows users to:

Ask complex, multi-part questions using natural language

Receive AI-generated responses that synthesize information from across the web

Follow up with additional questions to dig deeper into topics

Compare options, plan activities, and explore topics in greater depth

Google reports that AI Mode queries are typically twice as long as traditional search queries, reflecting users' comfort with asking more detailed questions when interacting with an AI system.

The multimodal revolution: Seeing and understanding

The most significant aspect of this update is the introduction of multimodal capabilities to AI Mode. "Multimodal" refers to the ability to process and understand multiple types of information simultaneously—in this case, both text and images.

How it works: The technology behind visual understanding

Google's new multimodal search in AI Mode operates through a sophisticated multi-step process:

Scene comprehension: Using Gemini's advanced capabilities, AI Mode analyzes the entire image to understand the complete scene, including how objects relate to one another within the visual context.

Object recognition: Drawing on Google Lens technology, the system precisely identifies individual objects within the image, noting their characteristics such as materials, colors, shapes, and arrangements.

Query fan-out: AI Mode then issues multiple queries about both the whole image and the specific objects it contains—essentially asking the questions a human might naturally wonder about what they're seeing.

Information synthesis: Drawing on Google's vast search index, the system gathers more information than a traditional search could provide in a single query.

Contextual response: Finally, AI Mode delivers a nuanced, contextually relevant response that addresses the user's question while providing links to explore specific aspects in greater depth.

This process creates a search experience that feels remarkably intuitive and human-like in its understanding of visual information.
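Google has not published how AI Mode implements these steps, so the following is only a minimal, hypothetical Python sketch of the query fan-out and synthesis stages. It assumes scene comprehension and object recognition have already produced a scene label and a list of detected objects. Every name here (`DetectedObject`, `fan_out_queries`, `search`, `synthesize`, `answer`, `TOY_INDEX`) is an illustrative invention, and the tiny keyword index is placeholder data standing in for a real search backend.

```python
import re
from dataclasses import dataclass


@dataclass
class DetectedObject:
    """Hypothetical output of the object-recognition step."""
    label: str
    attributes: list[str]


# Hypothetical toy index: keyword -> placeholder pages (not real search results).
TOY_INDEX = {
    "bookshelf": ["placeholder page: reading lists by shelf"],
    "dune": ["placeholder page: books similar to Dune"],
    "foundation": ["placeholder page: books similar to Foundation"],
}


def fan_out_queries(question: str, scene: str, objects: list[DetectedObject]) -> list[str]:
    """Build one query about the whole scene plus one query per detected object."""
    queries = [f"{question} ({scene})"]
    for obj in objects:
        # Combine the user's question with the object's attributes and label.
        queries.append(" ".join([question, *obj.attributes, obj.label]))
    return queries


def search(query: str) -> list[str]:
    """Toy search: return pages whose index keyword appears as a word in the query."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    hits: list[str] = []
    for keyword, pages in TOY_INDEX.items():
        if keyword in words:
            hits.extend(pages)
    return hits


def synthesize(results: dict[str, list[str]]) -> list[str]:
    """Merge per-query results, dropping duplicates while keeping first-seen order."""
    seen: set[str] = set()
    merged: list[str] = []
    for pages in results.values():
        for page in pages:
            if page not in seen:
                seen.add(page)
                merged.append(page)
    return merged


def answer(question: str, scene: str, objects: list[DetectedObject]) -> list[str]:
    """Fan out, run every query, then synthesize a single merged result list."""
    queries = fan_out_queries(question, scene, objects)
    results = {q: search(q) for q in queries}
    return synthesize(results)


if __name__ == "__main__":
    shelf = [
        DetectedObject("Dune", ["paperback"]),
        DetectedObject("Foundation", ["hardcover"]),
    ]
    question = "If I enjoyed these, what are some similar books that are highly rated?"
    for page in answer(question, "bookshelf", shelf):
        print(page)
```

Giving each detected object its own query means a page relevant to only one item in the photo can still surface, and the merge step removes duplicates across queries. A production system would rank and summarize these results into a written answer rather than simply concatenating them.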

Real-world applications: How users are leveraging multimodal AI Mode

The applications for this technology are extensive and growing as users discover new ways to interact with images. Here are some key use cases emerging from early adopters:

Product research and recommendations

One of the most compelling examples provided by Google involves taking a photo of a bookshelf and asking, "If I enjoyed these, what are some similar books that are highly rated?" AI Mode identifies each book, understands your taste based on the collection, and provides personalized recommendations with links to learn more or make purchases.

This same approach works for other product categories—from fashion to electronics to home decor—allowing users to find similar items or get information about products they encounter in the real world.

Educational support

Students and lifelong learners can snap photos of complex diagrams, charts, or natural phenomena and ask specific questions to deepen their understanding. Rather than typing out lengthy descriptions of what they're seeing, they can simply show the AI and inquire directly.

Travel and exploration

Travelers can photograph landmarks, restaurant menus in foreign languages, or local attractions and immediately receive detailed information, historical context, or recommendations for similar experiences nearby.

DIY and home improvement

When facing an unfamiliar tool, component, or household repair situation, users can photograph the item and ask specific questions about how to use it, fix it, or replace it—receiving step-by-step guidance that's contextually relevant to their exact situation.

Shopping and price comparison

By taking photos of products while shopping, users can quickly compare prices, check reviews, and find alternative options—all through a natural conversational interface rather than juggling multiple search queries.

The user experience: Conversational, contextual, and comprehensive

What makes AI Mode particularly powerful is how it transforms the search experience from transactional to conversational. Users aren't limited to a single query but can ask follow-up questions to refine their search.

For instance, after receiving book recommendations based on their bookshelf photo, a user might ask, "I'm looking for a quick read. Which one of these recommendations is the shortest?" AI Mode maintains context throughout the conversation, delivering increasingly personalized and relevant responses.

The interface remains clean and intuitive, focusing on providing comprehensive answers while still offering traditional web links for those who want to explore specific aspects in greater depth.

How Google's AI Mode compares to competitors

Google's enhancement of AI Mode comes amid increasing competition in the AI-powered search space. Here's how it stacks up against its main rivals:

vs. OpenAI's ChatGPT Search

While ChatGPT offers powerful conversational capabilities, Google's integration with its vast search index gives AI Mode access to more current and comprehensive information. Additionally, Google's decades of experience with image search technology provides an edge in visual understanding and recognition accuracy.

vs. Perplexity AI

Perplexity has gained popularity for its clean interface and citation-focused approach. However, Google's multimodal capabilities now push beyond what Perplexity currently offers in terms of visual analysis and understanding.

vs. Traditional search

Compared to standard Google Search, AI Mode represents a fundamentally different approach to information retrieval—focusing on answering complex questions directly rather than simply providing links. The addition of multimodal capabilities further widens this gap by allowing users to search with images in ways that traditional search engines can't match.

What this means for different stakeholders

The implications of Google's multimodal AI Mode extend beyond just a new search feature. Let's examine what this means for different groups:

1. For everyday users

The new capabilities democratize access to advanced AI search technology, making it easier to get answers to complex questions without needing to be an expert in crafting perfect search queries. The visual search capabilities particularly benefit those who encounter unfamiliar objects or situations and need immediate information.

2. For content creators and SEO professionals

The rise of AI-powered search fundamentally changes how content is discovered and consumed. Traditional SEO strategies focused solely on keywords may become less effective as users phrase queries as natural questions and AI systems evaluate content based on how well it actually answers those questions.

Visual content becomes increasingly important as Google gets better at understanding images and incorporating visual information into search results. Content creators should consider:

Creating more visual content with clear, identifiable objects and scenes

Ensuring images are properly labeled with alt text and contextual information

Developing content that answers complex, multi-part questions comprehensively

3. For e-commerce and retail

Product visibility in search results may increasingly depend on how items appear in images and how well they match specific visual criteria mentioned in queries. Retailers should:

Ensure high-quality, detailed product photography from multiple angles

Include comprehensive product information that addresses common questions

Consider how products appear in real-world contexts where users might photograph them

4. For marketers and advertisers

As search becomes more conversational and visual, marketing strategies need to evolve accordingly. This means:

Developing campaigns that anticipate and answer complex user questions

Creating visual assets that are distinctive and easily recognizable by AI systems

Understanding the new user journey through multimodal search interactions

How to access Google's AI Mode

AI Mode remains part of Google's Search Labs program, and access requirements include:

Being located in the United States

Being at least 18 years old

Using the latest version of the Google app or Chrome browser

Having search history turned on

Users can access AI Mode through:

The Google.com homepage (by tapping AI Mode below the search bar)

The Google app

Those not yet enrolled can sign up through Google Labs and will receive an email notification when their access begins.

Looking ahead: The future of multimodal search

Google's integration of multimodal capabilities into AI Mode represents just the beginning of a significant shift in how we interact with search technology. Here are some potential developments we might see in the coming months and years:

Expanded language support

While currently limited to English and U.S. users, we can expect Google to roll out these capabilities to more languages and regions as the technology matures.

Enhanced real-world integration

Future iterations might incorporate augmented reality elements, allowing users to point their cameras at the world around them and receive a real-time information overlay about what they're seeing.

More sophisticated visual understanding

As the underlying AI models continue to improve, we'll likely see even more nuanced understanding of visual information, including the ability to recognize emotions, actions, and complex social scenarios in images.

Cross-modal reasoning

Advanced versions may develop better capabilities to reason across different types of information—combining insights from text, images, and potentially even audio to provide more comprehensive answers.

Conclusion: The new visual search paradigm

Google's addition of multimodal capabilities to AI Mode marks a significant evolution in search technology—one that moves us closer to truly intuitive information retrieval. By allowing users to simply show what they're curious about and ask natural questions, Google is removing friction from the search process while simultaneously providing more comprehensive and contextually relevant answers.

This development reflects broader trends in AI toward systems that can process and reason across multiple types of information simultaneously, mirroring how humans naturally perceive and understand the world. As these capabilities continue to expand and improve, we can expect the line between digital and physical information access to blur further.

For users, creators, and businesses alike, now is the time to begin exploring and adapting to this new paradigm of visual, conversational search—one that promises to transform how we discover, learn about, and interact with the world around us.

Forget everything you know about hearing aids

Featuring one of the world's first dual-processing systems that separates speech from background noise, ensuring you never miss a beat, a word, or a moment. Claim your free consultation and join the 400k+ who can hear with crystal clarity thanks to these game-changing hearing aids.

Flipped.ai: Revolutionizing Recruitment with AI

At Flipped.ai, we’re transforming the hiring process with our turbocharged AI recruiter, making recruitment faster and smarter. With features like lightning-fast job matches, instant content creation, CV analysis, and smart recommendations, we streamline the entire hiring journey for both employers and candidates.

For Companies:

Looking to hire top talent efficiently? Flipped.ai helps you connect with the best candidates in record time. From creating job descriptions to making quick matches, our AI-powered solutions make recruitment a breeze.

Sign up now to get started: Company Sign Up

For Job Seekers:

Explore professional opportunities with Flipped.ai! Check out our active job openings and apply directly to find your next career move with ease. Sign up today to take the next step in your journey.

Sign up and apply now: Job Seeker Sign Up

For more information, reach out to us at [email protected].

Want to get your product in front of 75,000+ professionals, entrepreneurs, decision-makers, and investors around the world? 🚀

If you are interested in sponsoring, contact us at [email protected].

Thank you for being part of our community, and we look forward to continuing this journey of growth and innovation together!

Best regards,

Flipped.ai Editorial Team