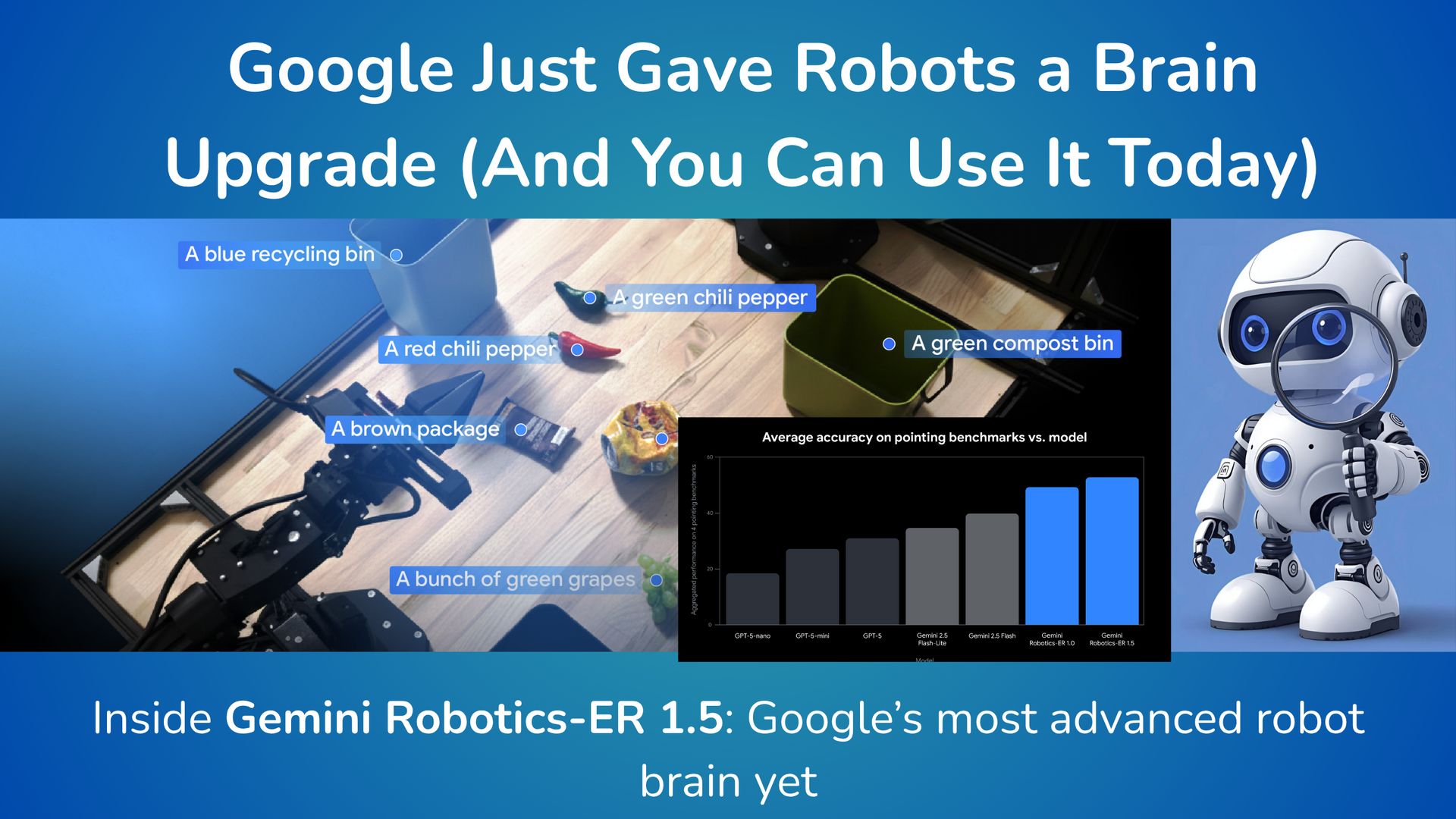

Google Just Gave Robots a Brain Upgrade (And You Can Use It Today)

Inside Gemini Robotics-ER 1.5: Google’s most advanced robot brain yet

Transform your hiring with Flipped.ai – the hiring Co-Pilot that's 100X faster. Automate hiring, from job posts to candidate matches, using our Generative AI platform. Get your free Hiring Co-Pilot.

Dear Reader,

What if robots could think, plan, and act with the reasoning skills of a human engineer, identifying objects, making decisions, and adapting to real-world challenges without breaking a sweat?

Flipped.ai’s weekly newsletter reaches over 75,000 professionals, innovators, and decision-makers worldwide.

This week, we’re diving into Google’s latest breakthrough: Gemini Robotics-ER 1.5, a model built to give robots the ability to reason about the physical world. Think of it as a brain upgrade that transforms clumsy machines into strategic problem-solvers.

And the best part? Developers can start building with it today through Google AI Studio and the Gemini API.

The robotics revolution isn’t on the horizon. It’s already here, and it’s about to change how we build, work, and live.

Before we dive in, a quick thank you to our sponsor, Speedeon Data.

Where to find high-intent holiday shoppers

Let’s be real: most brands are targeting the same people this holiday season. “Shoppers 25–54 interested in gifts.” Sound familiar? That's why CPMs spike and conversion rates tank.

Speedeon’s Holiday Audience Guide breaks down the specific digital audience segments that actually perform, from early-bird deal hunters actively comparing prices to last-minute panic buyers with high purchase intent. These aren't demographic guesses.

Our behavioral audiences are built on actual shopping signals and real-world data, the same approach we use for clients like FanDuel and HelloFresh.

You'll get the exact audiences, when to deploy them, which platforms work best, and what kind of performance to expect.

Download the guide and get smarter about your holiday targeting before the holiday rush hits.

What makes this actually revolutionary?

Look, we've all seen flashy robot demos that fall apart in real-world scenarios. But this is different. Here's why:

It thinks like we do (but for robots).

Imagine asking a robot to "sort this pile into compost, recycling, and trash." Sounds simple, right?

Wrong.

That robot needs to:

Google your local recycling rules.

Identify every object in the pile.

Figure out which bin each item goes into.

Actually execute the plan without making a mess.

Gemini Robotics-ER 1.5 is built for exactly this kind of "embodied reasoning": thinking that understands the physical world and can plan how to act in it. It handles the search, perception, and planning, then calls your robot's own APIs or an action model to carry out the physical steps.

Four game-changing capabilities you need to know

1. Lightning-fast spatial understanding

This model can look at a scene and quickly pinpoint where objects are, returning precise 2D point coordinates. And it does this at Flash model speeds, which means it's both accurate AND fast.

Ask it to "point at anything you can pick up," and it'll identify every graspable object in view, considering size, weight, and whether your robot can actually handle it. No more guessing games.
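To make the pointing idea concrete, here's a minimal Python sketch that turns a pointing reply into pixel positions you could hand to a robot. It assumes the reply is a JSON list like `[{"point": [y, x], "label": "mug"}]` with coordinates normalized to a 0-1000 grid, which is how Google's Robotics-ER documentation describes the format at the time of writing; double-check the current docs before relying on it. `Point` and `to_pixels` are our own hypothetical names.

```python
import json
from dataclasses import dataclass

FENCE = "`" * 3  # avoids writing a literal code fence inside this example


@dataclass
class Point:
    label: str
    x_px: int
    y_px: int


def to_pixels(response_text: str, width: int, height: int) -> list[Point]:
    """Convert [y, x] points normalized to 0-1000 into pixel coordinates.

    Assumed reply format: [{"point": [y, x], "label": ...}, ...].
    """
    text = response_text.strip()
    # Models often wrap JSON in a markdown fence; strip it if present.
    if text.startswith(FENCE):
        text = text.split("\n", 1)[1].rsplit(FENCE, 1)[0]
    points = []
    for item in json.loads(text):
        y_norm, x_norm = item["point"]  # note the [y, x] order
        points.append(Point(
            label=item["label"],
            x_px=round(x_norm / 1000 * width),
            y_px=round(y_norm / 1000 * height),
        ))
    return points


if __name__ == "__main__":
    reply = '[{"point": [500, 250], "label": "mug"}]'
    print(to_pixels(reply, width=640, height=480))
    # [Point(label='mug', x_px=160, y_px=240)]
```

Scaling against the image's real width and height is what lets the same normalized answer work for any camera resolution.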

2. Actually useful agentic behavior

Here's where it gets really interesting. You can give it a complex command like "reorganize my desk to match this photo," and it'll:

i) Break down the task into logical steps

ii) Call the right tools (Google Search, hardware APIs, specialized models)

iii) Monitor its own progress

iv) Adjust the plan if something goes wrong

It's not just following rigid instructions – it's orchestrating a whole workflow.
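The plan, call tools, monitor, adjust loop can be sketched in plain Python. Treat this as a hypothetical illustration of the control flow only, not Google's API: the tool names, `run_plan`, and using a simple retry as a stand-in for re-planning are all our own assumptions. In a real system the plan would come from the model, and failures would be fed back to it so it can revise the plan.

```python
from typing import Callable

# Hypothetical tools: in a real system these might wrap Google Search,
# your robot's hardware API, or another model. Each returns True on success.
Tool = Callable[[str], bool]


def run_plan(plan: list[tuple[str, str]], tools: dict[str, Tool],
             max_retries: int = 2) -> list[str]:
    """Run (tool_name, argument) steps in order and log every attempt.

    A failed step is retried up to max_retries times; this simple retry is a
    placeholder for asking the model to re-plan, which is not shown here.
    """
    log: list[str] = []
    for tool_name, arg in plan:
        for attempt in range(1, max_retries + 2):
            ok = tools[tool_name](arg)  # execute the step
            status = "ok" if ok else "failed"
            log.append(f"{tool_name}({arg}) attempt {attempt}: {status}")
            if ok:  # monitor: move on only when the step succeeded
                break
        else:
            # Every attempt failed: stop instead of continuing blindly.
            log.append(f"giving up on {tool_name}({arg})")
            break
    return log


if __name__ == "__main__":
    results = iter([False, True])  # the grasp fails once, then succeeds
    tools = {"search": lambda q: True, "grasp": lambda obj: next(results)}
    for entry in run_plan([("search", "desk layout"), ("grasp", "stapler")], tools):
        print(entry)
```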

3. You control the "Thinking Time"

This feature is chef's kiss. 🤌

You can now adjust how long the model spends reasoning about a task:

i) Quick reaction needed? Dial down the thinking budget for faster responses

ii) Complex multi-step assembly? Let it think longer for better accuracy

It's like having a volume knob for robot intelligence. Simple tasks get quick answers; complex problems get the deep thinking they deserve.
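In the Gemini API, that volume knob is the thinking budget, set in a REST request's `generationConfig.thinkingConfig.thinkingBudget` field (`thinking_budget` in the `google-genai` Python SDK). The minimal sketch below maps task types to budgets; the task categories and token numbers are hypothetical and would need tuning for your own robot and latency needs.

```python
import json

# Illustrative thinking budgets in tokens. These numbers are our own
# assumptions for the sketch, not values recommended by Google.
BUDGETS = {"reactive": 0, "standard": 1024, "complex": 8192}


def generation_config(task_kind: str) -> dict:
    """Return a Gemini API REST generationConfig with a thinking budget.

    With the google-genai Python SDK, the equivalent setting is
    types.ThinkingConfig(thinking_budget=...).
    """
    return {"thinkingConfig": {"thinkingBudget": BUDGETS[task_kind]}}


if __name__ == "__main__":
    print(json.dumps(generation_config("reactive")))
    print(json.dumps(generation_config("complex")))
```

Keeping the budgets in one table makes the speed vs. accuracy trade-off an explicit, reviewable choice per task.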

4. Built-in safety that actually works

The model has been trained to recognize and refuse dangerous or physically impossible tasks. Ask it to lift something beyond your robot's weight capacity? It'll tell you no.

It's also been evaluated on safety benchmarks like ASIMOV to check that it avoids generating harmful plans. (Google rightly points out that this doesn't replace proper robotics safety engineering: you still need emergency stops and collision avoidance!)
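One way to act on that advice is to keep a second, model-independent check in your own code before any plan reaches the hardware. The sketch below is hypothetical: `RobotLimits`, `check_step`, and the assumption that you have a mass estimate and a distance for each target are ours, and a check like this complements hardware e-stops and collision avoidance rather than replacing them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RobotLimits:
    max_payload_kg: float  # rated payload from your robot's datasheet
    max_reach_m: float     # how far from the base the arm can reach


def check_step(est_mass_kg: float, distance_m: float,
               limits: RobotLimits) -> list[str]:
    """Return reasons to reject a planned pick; an empty list means OK.

    This runs in your own code, independently of the model, so a plan
    that slips past the model's own checks is still caught here.
    """
    problems = []
    if est_mass_kg > limits.max_payload_kg:
        problems.append(f"mass {est_mass_kg} kg exceeds payload {limits.max_payload_kg} kg")
    if distance_m > limits.max_reach_m:
        problems.append(f"target {distance_m} m is beyond reach {limits.max_reach_m} m")
    return problems


if __name__ == "__main__":
    arm = RobotLimits(max_payload_kg=3.0, max_reach_m=0.8)
    print(check_step(1.2, 0.5, arm))  # []
    print(check_step(5.0, 0.5, arm))
```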

See it in action: Real examples

The coffee shop test

Show it a coffee machine and ask, "Where should I put my mug to make coffee?" It'll identify the exact spot with precise coordinates, understanding the workflow of actually making coffee.

The time traveler

Feed it a video of robotic arms organizing objects, then ask what happened between specific timestamps. It'll give you a timestamped breakdown of each action, showing an understanding of cause and effect in the physical world.

One tester showed it a video of arms sorting pens and markers. The model accurately described: "From 00:15 to 00:22, the left arm picks up the blue pen and places it in the mesh cup." That's not just seeing; that's understanding.
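If you want to use a breakdown like that downstream, say for logging or for checking a robot's work, you can parse it into structured events. This minimal sketch assumes the reply uses the "From MM:SS to MM:SS, ..." phrasing quoted above; in practice, asking the model for JSON would be more robust. `Event` and `parse_events` are hypothetical names.

```python
import re
from dataclasses import dataclass

# Matches lines like "From 00:15 to 00:22, the left arm picks up ...".
# The MM:SS pattern is assumed from the example above, not a fixed format.
SPAN = re.compile(r"From (\d{1,2}):(\d{2}) to (\d{1,2}):(\d{2}), (.+)")


@dataclass
class Event:
    start_s: int
    end_s: int
    action: str


def parse_events(text: str) -> list[Event]:
    """Turn a timestamped video description into structured events."""
    events = []
    for line in text.splitlines():
        match = SPAN.search(line)
        if match:
            m1, s1, m2, s2, action = match.groups()
            events.append(Event(int(m1) * 60 + int(s1),
                                int(m2) * 60 + int(s2),
                                action.strip()))
    return events


if __name__ == "__main__":
    reply = ("From 00:15 to 00:22, the left arm picks up the blue pen "
             "and places it in the mesh cup.")
    print(parse_events(reply))
```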

The organizer

Ask it to sort trash into bins following local rules. It'll search for the regulations, identify each object, explain its reasoning, and provide the exact coordinates for where to place each item. All in one go.
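A rough sketch of how you might request and consume that kind of answer in Python. The prompt wording and the `{"label", "bin", "point"}` JSON shape are our own assumptions, not a documented schema, and the sample reply in the usage block is made up for illustration.

```python
import json
from collections import defaultdict

# Hypothetical prompt wording; the JSON shape it asks for is our assumption.
PROMPT = (
    "Sort the items on the table into compost, recycling, and trash "
    "following the local rules. Reply as JSON: "
    '[{"label": <item>, "bin": <bin>, "point": [y, x]}] '
    "with points normalized to 0-1000."
)


def group_by_bin(response_text: str) -> dict[str, list[str]]:
    """Group item labels by the bin the model assigned them to."""
    groups: dict[str, list[str]] = defaultdict(list)
    for item in json.loads(response_text):
        groups[item["bin"]].append(item["label"])
    return dict(groups)


if __name__ == "__main__":
    # Made-up reply, for illustration only.
    reply = ('[{"label": "soda can", "bin": "recycling", "point": [410, 220]},'
             ' {"label": "banana peel", "bin": "compost", "point": [530, 610]}]')
    print(group_by_bin(reply))
```

Asking for one flat JSON list keeps the "all in one go" answer easy to validate before a single item is moved.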

What does this mean for you?

If you're building robotics applications, this changes the game:

No more hardcoding every scenario – the model reasons through novel situations

Faster development cycles – less time wrestling with perception pipelines

More capable robots – handle real-world complexity instead of controlled demos

Flexible deployment – trade accuracy against speed based on your use case

Getting your hands dirty

Ready to experiment? Here's how to dive in:

Google AI Studio – Start experimenting with the model through a visual interface

Gemini API – Integrate directly into your applications

Colab Notebook – See practical implementations and code examples

Developer Docs – Full quickstart guides and API references

The model is in preview now, so you're getting in on the ground floor.
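If you'd rather go straight to the Gemini API, a request can be sketched with nothing but the Python standard library. This is a minimal sketch, assuming the preview model ID `gemini-robotics-er-1.5-preview` and the public REST `generateContent` endpoint; `build_request` is our own helper name, and the official quickstart (which uses the `google-genai` SDK) remains the authoritative reference.

```python
import base64
import json
import urllib.request

# Preview model ID at the time of writing; check the developer docs.
MODEL = "gemini-robotics-er-1.5-preview"
ENDPOINT = ("https://generativelanguage.googleapis.com/v1beta/models/"
            f"{MODEL}:generateContent")


def build_request(image_png: bytes, prompt: str, api_key: str,
                  thinking_budget: int = 0) -> urllib.request.Request:
    """Build (but do not send) a generateContent request with one image."""
    body = {
        "contents": [{
            "parts": [
                {"inline_data": {
                    "mime_type": "image/png",
                    "data": base64.b64encode(image_png).decode("ascii"),
                }},
                {"text": prompt},
            ],
        }],
        "generationConfig": {
            "thinkingConfig": {"thinkingBudget": thinking_budget},
        },
    }
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "x-goog-api-key": api_key},
        method="POST",
    )
```

Sending it is one call to `urllib.request.urlopen(req)`; in the returned JSON, the model's reply text typically sits at `candidates[0]["content"]["parts"][0]["text"]`, ready for whatever parsing your application needs.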

The bigger picture

This isn't just a standalone model. It's part of Google's broader Gemini Robotics system, which includes:

Vision-Language-Action models (VLAs) for motor control

Cross-embodiment learning (robots learning from different types of robots)

End-to-end action models

In other words, they're building the complete stack for intelligent robotics. This reasoning model is just the brain; there's a whole body coming too.

What’s next?

The line between research demos and real-world robotics just blurred. Google’s Gemini Robotics-ER 1.5 isn’t a glimpse of what’s coming someday; even in preview, it’s a working tool you can build with today.

If you’re in robotics, this could mean faster development, smarter automation, and breakthroughs that were impossible just a year ago. And if you’re simply AI-curious, the Colab notebook alone is worth a look to see the future taking shape in real time.

One thing is clear: the age of reasoning robots has begun, and the entrepreneurs, engineers, and innovators who embrace it first will define what comes next.

So dive in, explore, and start creating. The future of robotics isn’t waiting. Neither should you.

Until next week, keep building what’s next.

Want to get your product in front of 75,000+ professionals, entrepreneurs, decision-makers, and investors around the world? 🚀

If you are interested in sponsoring, contact us at [email protected].

Thank you for being part of our community, and we look forward to continuing this journey of growth and innovation together!

Best regards,

Flipped.ai Editorial Team